regularization function

-

Eunho Yang and Aurelie Lozano and Pradeep Ravikumar

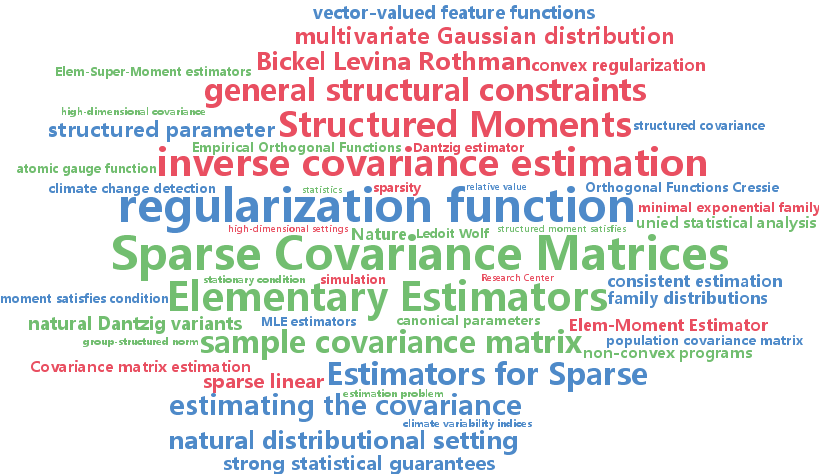

Elementary Estimators for Sparse Covariance Matrices and other Structured Moments (pdf)

We consider the problem of estimating distributional parameters that are expected values of given feature functions. We are interested in recovery under high-dimensional regimes, where the number of variables $p$ is potentially larger than the number of samples $n$, and where we need to impose structural constraints upon the parameters. In a natural distributional setting for this problem, the feature functions comprise the sufficient statistics of an exponential family, so that the problem entails estimating structured moments of exponential family distributions. A special case of the above involves estimating the covariance matrix of a random vector, where the natural distributional setting corresponds to the multivariate Gaussian distribution. Unlike the inverse covariance estimation case, we show that the regularized MLEs for covariance estimation, as well as natural Dantzig variants, are non-convex

-

Timothy Mann and Daniel Mankowitz and Shie Mannor

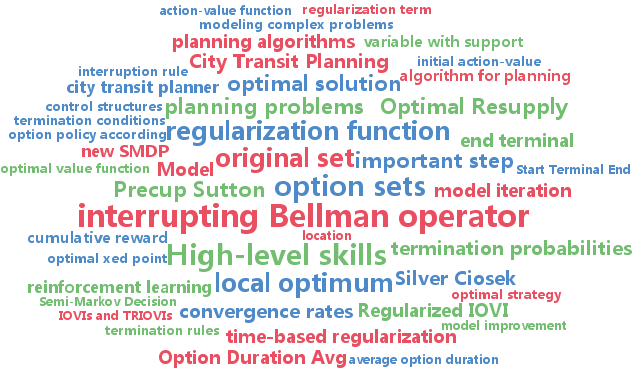

Time-Regularized Interrupting Options (TRIO) (pdf)

High-level skills relieve planning algorithms from low-level details. But when the skills are poorly designed for the domain, the resulting plan may be severely suboptimal. Sutton et al. (1999) made an important step towards resolving this problem by introducing a rule that automatically improves a set of skills called options. This rule terminates an option early whenever switching to another option gives a higher value than continuing with the current option. However, they only analyzed the case where the improvement rule is applied once. We show conditions under which this rule converges to the optimal set of options. A new Bellman-like operator that simultaneously improves the set of options is at the core of our analysis. One problem with the update rule is that it tends to favor lower-level skills. Therefore, we introduce a regularization term that favors longer-duration skills. Experimental results demonstrate that this approach can derive a good set of high-level skills even when the original set of skills cannot solve the problem.
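
The interruption rule described in this abstract can be illustrated with a minimal sketch. The function name `should_interrupt`, the array `q_values`, and the margin `rho` are hypothetical names chosen for illustration. In particular, the additive margin is only one simple way to favor longer-running options and is an assumption here, not necessarily the regularization term used in the paper.

```python
import numpy as np

def should_interrupt(q_values, current_option, rho=0.0):
    """Decide whether to terminate the running option early in the current state.

    q_values: 1-D array where q_values[o] is the estimated value of
        starting option o in the current state.
    current_option: index of the option currently being executed.
    rho: hypothetical non-negative margin (an assumption of this sketch);
        a larger rho makes interruption rarer, which favors longer skills.
    """
    best_alternative = np.max(q_values)
    # Interrupt only if switching beats continuing by more than the margin.
    return bool(best_alternative > q_values[current_option] + rho)

# Usage: option 1 looks better than the running option 0.
q = np.array([1.0, 1.5, 0.2])
interrupt_plain = should_interrupt(q, current_option=0)
interrupt_regularized = should_interrupt(q, current_option=0, rho=1.0)
```

With `rho=0.0` the sketch reduces to the plain rule of switching whenever another option has a strictly higher value. With a positive margin, small value differences no longer trigger a switch.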

-

Mohamad Ali Torkamani and Daniel Lowd

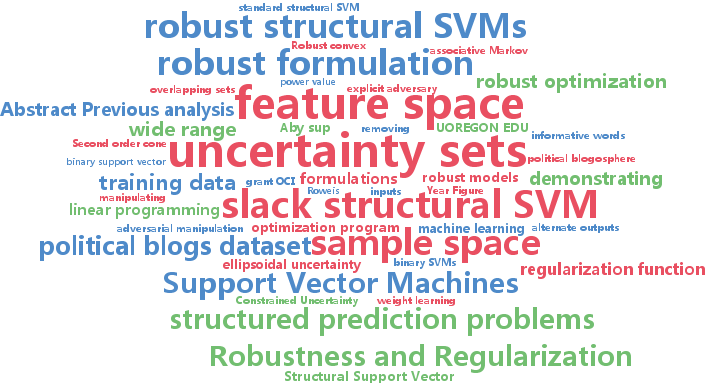

On Robustness and Regularization of Structural Support Vector Machines (pdf)

Previous analysis of binary SVMs has demonstrated a deep connection between robustness to perturbations over uncertainty sets and regularization of the weights. In this paper, we explore the problem of learning robust models for structured prediction problems. We first formulate the problem of learning robust structural SVMs when there are perturbations in the feature space. We consider two different classes of uncertainty sets for the perturbations: ellipsoidal uncertainty sets and polyhedral uncertainty sets. In both cases, we show that the robust optimization problem is equivalent to the non-robust formulation with an additional regularizer. For the ellipsoidal uncertainty set, the additional regularizer is based on the dual norm of the norm that constrains the ellipsoidal uncertainty. For the polyhedral uncertainty set, we show that the robust optimization problem is equivalent to adding a linear regularizer in a transformed weight space related to the linear constraints of the polyhedron. We also show that these constraint sets can be combined and demonstrate a number of interesting special cases. This represents the first theoretical analysis of robust optimization of structural support vector machines. Our experimental results show that our method outperforms non-robust structural SVMs on real-world data when the test data distribution has drifted from the training data distribution.
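
The link between robustness and a dual-norm regularizer can be checked numerically in the simplest setting. The sketch below is a simplification to a single linear score with an L2-ball perturbation, not the structural SVM derivation from the paper. The function names and the example vectors are hypothetical. It relies on the standard fact that the worst case of `w @ delta` over `||delta||_2 <= eps` is `-eps * ||w||_2`, since the L2 norm is its own dual.

```python
import numpy as np

def worst_case_score(w, x, eps):
    """Minimum of w @ (x + delta) over all delta with ||delta||_2 <= eps."""
    # The minimizing perturbation points directly against w with length eps.
    delta = -eps * w / np.linalg.norm(w)
    return float(w @ (x + delta))

def regularized_score(w, x, eps):
    """Nominal score minus eps times the dual norm of w (L2 is self-dual)."""
    return float(w @ x - eps * np.linalg.norm(w))

# Illustrative values only (assumed for this sketch).
w = np.array([3.0, 4.0])
x = np.array([1.0, 1.0])
eps = 0.5

robust = worst_case_score(w, x, eps)
penalized = regularized_score(w, x, eps)

# Sanity check: no random perturbation inside the ball does worse.
rng = np.random.default_rng(0)
directions = rng.normal(size=(1000, 2))
directions /= np.linalg.norm(directions, axis=1, keepdims=True)
sampled_min = float(np.min((x + eps * directions) @ w))
```

In this toy case the two quantities agree, so guarding against the worst perturbation is the same as subtracting a norm penalty on the weights from the nominal score.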